Copyright © Triotech Systems 2024 all rights reserved

CD With AWS ECS And CodePipeline Using Terraform – Easy Steps

Achieving smooth and reliable deployment processes is crucial. This article explores the integration of AWS Elastic Container Service (ECS) with AWS CodePipeline using Terraform. By automating the Continuous Deployment (CD) pipeline, developers can streamline the release of containerized applications, ensuring efficiency and reliability. So, let’s begin!

STEP 1: Establishing IAM Role and CodeCommit Credentials

Create ECS Task Service Role:

Establish a dedicated IAM role for ECS tasks, enabling them to execute AWS service requests on your behalf.

Attach CodeCommit Access Policy:

Attach the AWSCodeCommitPowerUser managed policy to your IAM user so that it has the permissions needed to work with AWS CodeCommit.

Generate Git Credentials:

Securely interact with AWS CodeCommit by generating HTTPS Git credentials. These credentials enable essential actions like cloning, pushing, and pulling from the designated CodeCommit Repository, ensuring a secure and streamlined code version control process.

STEP 2: Terraform Scripts for Infrastructure Deployment

We use Terraform to build the foundational resources required for our ECS and CodePipeline setup.

Providers Configuration

Pin the AWS provider to a known version in the `required_providers` block, then configure the provider with the desired profile and region.

VPC Setup

Define the VPC, Subnets, and Internet Gateway resources using Terraform to establish a secure and scalable network infrastructure.

Variables Definition

Specify variables essential for VPC configuration, such as CIDR blocks, availability zones, and subnet IP ranges.

IAM Roles & Policies

Define the IAM roles and policies necessary for CodeBuild, granting access to ECR and S3.

Route Tables

Configure a single route table for both public subnets.

Security Groups

Define security groups for ECS Service and Application Load Balancer.

Application Load Balancer (ALB)

Create an ALB with specified listeners and target groups.

ECS & ECR Configuration

Set up ECS Cluster, ECR Repository, Task Definition, and ECS Service using Terraform.

CI/CD Pipeline Configuration

Define resources and stages for the CodePipeline, integrating CodeCommit, CodeBuild, and ECS deployment.

Data and Outputs

Retrieve and output essential data, such as IAM role information and ALB DNS.

Extra Variables

Specify additional variables like repository name, branch name, build project, and URI repo.

By organizing your infrastructure as code with Terraform, this script automates the deployment and configuration of the essential AWS resources for your ECS and CodePipeline setup.

STEP 3: Implementing a Golang HTTP Server for ECS Tasks

In this step, we create a straightforward Golang HTTP server designed to retrieve the private IP addresses of ECS tasks. The server listens on port 5000, facilitating communication within the ECS cluster.

Explanation:

– The `main` function initializes an HTTP server on port 5000.

– The `handler` function processes incoming requests, printing request details and form data.

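– The `GetOutboundIP` function opens a UDP socket toward a known external address (`8.8.8.8:80`) and reads the socket's local address. Because UDP is connectionless, no packet is actually sent; the call only makes the operating system choose the outbound interface, whose address is the private IP address of the ECS task within the cluster.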

This Golang code serves as a foundation for obtaining essential information about ECS tasks, facilitating communication and coordination within the ECS cluster.
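As a minimal sketch of the server described above, the following program follows the same structure: `main` listens on port 5000, `handler` logs request details and form data, and `GetOutboundIP` reads the local address of a UDP socket. The `formatResponse` helper, the response wording, and the error handling are illustrative assumptions rather than details taken from the original code.

```go
package main

import (
	"fmt"
	"log"
	"net"
	"net/http"
)

// GetOutboundIP returns the local address the OS would use to reach an
// external host. Dialing UDP sends no packets; it only selects a route.
func GetOutboundIP() (string, error) {
	conn, err := net.Dial("udp", "8.8.8.8:80")
	if err != nil {
		return "", err
	}
	defer conn.Close()

	addr, ok := conn.LocalAddr().(*net.UDPAddr)
	if !ok {
		return "", fmt.Errorf("unexpected local address type %T", conn.LocalAddr())
	}
	return addr.IP.String(), nil
}

// formatResponse builds the response body. Hypothetical helper: the exact
// wording of the response is an assumption made for this sketch.
func formatResponse(method, path, ip string) string {
	return fmt.Sprintf("%s %s served by task with private IP %s\n", method, path, ip)
}

// handler logs the request details and form data, then reports the task IP.
func handler(w http.ResponseWriter, r *http.Request) {
	if err := r.ParseForm(); err != nil {
		http.Error(w, "bad request", http.StatusBadRequest)
		return
	}
	log.Printf("method=%s path=%s form=%v", r.Method, r.URL.Path, r.Form)

	ip, err := GetOutboundIP()
	if err != nil {
		http.Error(w, "could not determine local IP", http.StatusInternalServerError)
		return
	}
	fmt.Fprint(w, formatResponse(r.Method, r.URL.Path, ip))
}

func main() {
	http.HandleFunc("/", handler)
	// Port 5000 matches the port named in this step.
	log.Fatal(http.ListenAndServe(":5000", nil))
}
```

Returning an error from `GetOutboundIP` instead of terminating the process keeps the server running even if the address lookup fails for a single request.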

STEP 4: Dockerizing the Golang HTTP Server

In this step, we containerize the Golang HTTP server using a Dockerfile. The resulting Docker image will encapsulate the HTTP server application, making it portable and deployable across different environments.

Explanation:

– The Dockerfile employs a multi-stage build. The first stage (`builder`) builds the Golang HTTP server binary.

– The second stage creates a minimalistic image (`scratch`) that only includes the compiled HTTP server binary.

– The `EXPOSE 5000` instruction informs Docker that the application will listen on port 5000.

– The `ENTRYPOINT` specifies the command to run when the container starts, launching the Golang HTTP server.

This Dockerfile serves as a blueprint for packaging the Golang HTTP server into a Docker image, streamlining deployment and ensuring consistency across different environments.

STEP 5: Create the terraform.tfvars File

Create a file named terraform.tfvars in the same directory as your Terraform scripts.

Open terraform.tfvars and set the values for your variables. Here’s an example based on the provided Terraform script:

Replace <YOUR_URI_REPO_VALUE> with the actual value for your URI repo. Terraform will use this file to set the values of these variables during the execution.

When you run your Terraform commands (e.g., `terraform apply`), Terraform will automatically read the values from `terraform.tfvars`.

STEP 6: Infrastructure Deployment Process

Run `terraform init`, `terraform validate`, `terraform plan`, and `terraform apply`, in that order, to initialize, validate, plan, and apply the Terraform configuration that creates the infrastructure.

Upon completion of the infrastructure creation, retrieve the outputs using `terraform output` to obtain essential information about the deployed resources.

STEP 7: Uploading Files to CodeCommit Repository

Follow these steps to upload the Dockerfile, buildspec.yml, and Golang code to the CodeCommit repository:

1. Clone the Repository: `git clone <CodeCommit_Repository_URL>`

2. Copy Files to the Repository Folder:

Copy the buildspec.yml, Dockerfile, and Golang code to the cloned repository folder.

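3. Commit the Changes: stage the files with `git add .`, then commit them with `git commit -m "<commit message>"`.

4. Push Changes to CodeCommit Repository: `git push origin <branch_name>`, where `<branch_name>` is the branch your pipeline watches.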

Ensure that you replace `<CodeCommit_Repository_URL>` with the actual URL of your CodeCommit repository. This sequence of commands adds your files to the repository, commits the changes, and pushes them to the CodeCommit repository for version control.

STEP 8: Verify the Pipeline

After the completion of the “Build” stage, inspect the Docker image within the ECR repository to ensure successful image creation and validation.

STEP 9: Monitor the ECS Service

Once the “Deploy” stage concludes, monitor the ECS Service to confirm the successful deployment. Check the ECS Tasks to ensure they are running as expected.

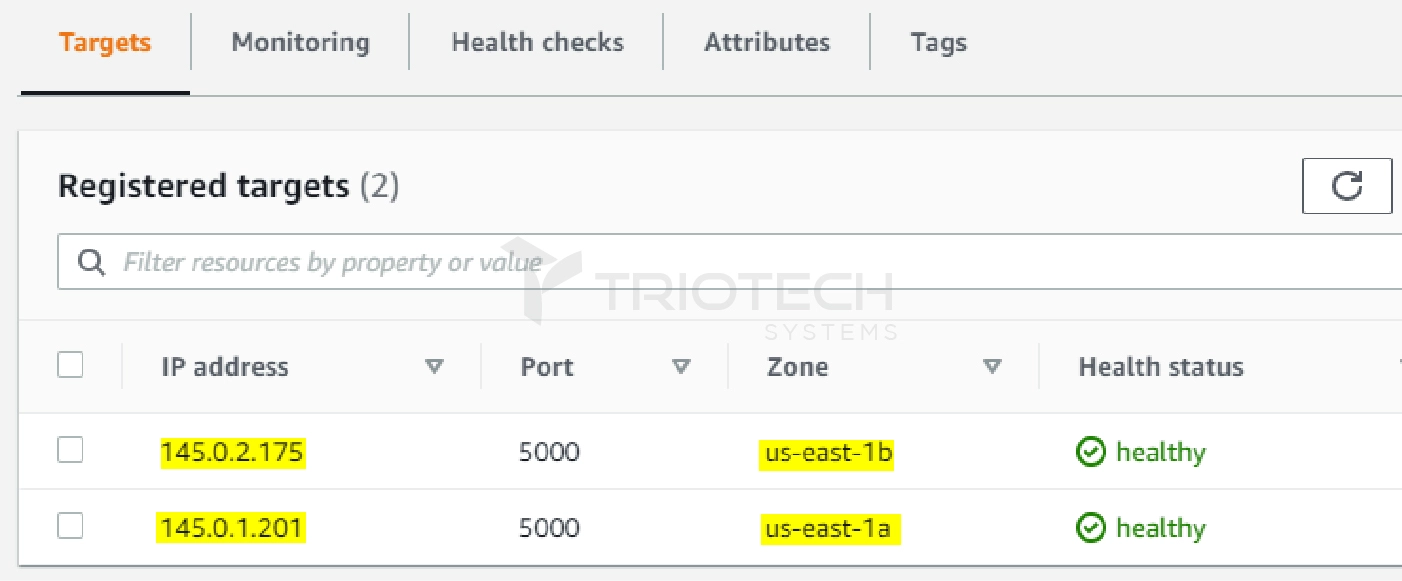

STEP 10: Validate the Target Group

Following the completion of the deployment, inspect the Target Group to verify that the ECS Service has been correctly registered and is actively serving traffic.

STEP 11: Validate Application Load Balancer Operations

After the deployment stages are complete, confirm the proper functioning of the Application Load Balancer (ALB). Verify that the ALB is efficiently distributing incoming traffic to the ECS tasks, ensuring seamless operation.
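One way to check the distribution is to send repeated requests to the ALB and count which task answers each one. The sketch below assumes that every response body contains the private IP address of the task that served it, and that the ALB URL is passed as the first command-line argument. The `tallyIPs` helper, the request count of 20, and the 5-second timeout are hypothetical choices for illustration.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"os"
	"regexp"
	"time"
)

// ipv4 matches a dotted-quad address anywhere in a response body.
var ipv4 = regexp.MustCompile(`\b(?:\d{1,3}\.){3}\d{1,3}\b`)

// tallyIPs counts, per address, how many bodies mention that address first.
// Bodies without any address are ignored.
func tallyIPs(bodies []string) map[string]int {
	counts := make(map[string]int)
	for _, body := range bodies {
		if ip := ipv4.FindString(body); ip != "" {
			counts[ip]++
		}
	}
	return counts
}

func main() {
	if len(os.Args) < 2 {
		fmt.Fprintln(os.Stderr, "usage: albcheck http://<ALB_DNS_NAME>/")
		return
	}

	client := &http.Client{Timeout: 5 * time.Second}
	var bodies []string

	// Send a fixed number of requests and keep each response body.
	for i := 0; i < 20; i++ {
		resp, err := client.Get(os.Args[1])
		if err != nil {
			fmt.Fprintln(os.Stderr, "request failed:", err)
			continue
		}
		body, err := io.ReadAll(resp.Body)
		resp.Body.Close()
		if err != nil {
			fmt.Fprintln(os.Stderr, "read failed:", err)
			continue
		}
		bodies = append(bodies, string(body))
	}

	for ip, n := range tallyIPs(bodies) {
		fmt.Printf("%s answered %d requests\n", ip, n)
	}
}
```

If more than one address appears in the output, requests are reaching several tasks; a single address with multiple running tasks suggests checking the target group registrations.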

Conclusion

In this guide, we’ve successfully demystified the integration of AWS services, Terraform, and CodePipeline for a streamlined containerized workflow with Amazon ECS. By establishing a robust infrastructure and implementing CI/CD practices, you’ve gained valuable insights into optimizing development and deployment processes.

Improve your cloud experience with Triotech Systems. Our tailored solutions and expertise ensure efficient AWS infrastructure management. Contact us today to discover how Triotech Systems can propel your development cycles to new heights.

FAQs

Amazon Elastic Container Service (ECS) simplifies the deployment and management of containerized applications on AWS. It allows you to run Docker containers without managing the underlying infrastructure, making it an ideal choice for scalable and flexible container orchestration.

Terraform is an Infrastructure as Code (IaC) tool that enables the automated provisioning of cloud resources. With Terraform, users can define and manage infrastructure in a declarative configuration file, promoting consistency and reliability in AWS deployments.

AWS CodePipeline automates the end-to-end release process, from source code changes to deployment. Integrating it with AWS ECS streamlines continuous integration and continuous deployment (CI/CD) workflows, ensuring efficient and reliable application delivery.

A Dockerfile is a script used to create a Docker image. In the context of ECS, it defines the configuration for building a container image that runs your application. This file is crucial for creating consistent and reproducible container environments.

After deploying tasks on ECS, AWS provides tools like Amazon CloudWatch for monitoring and AWS CloudTrail for auditing. By leveraging these services, you can track performance metrics, set up alarms, and troubleshoot issues effectively in your ECS environment.
